Speech-based Sociability Assessment for Mental Health

The Problem:

Sociability is a transdiagnostic mental health construct implicated in several mental health disorders, such as depression, psychosis, and suicidality. Existing tools for sociability assessment are mostly subjective, self-report questionnaires. We are pursuing first-of-its-kind research on using free-living audio for sociability assessment, which could complement clinical questionnaires. We combine recent advances in speech processing with the innovations needed to meet the challenges of free-living conditions to build a clinically relevant solution.

Our Solution:

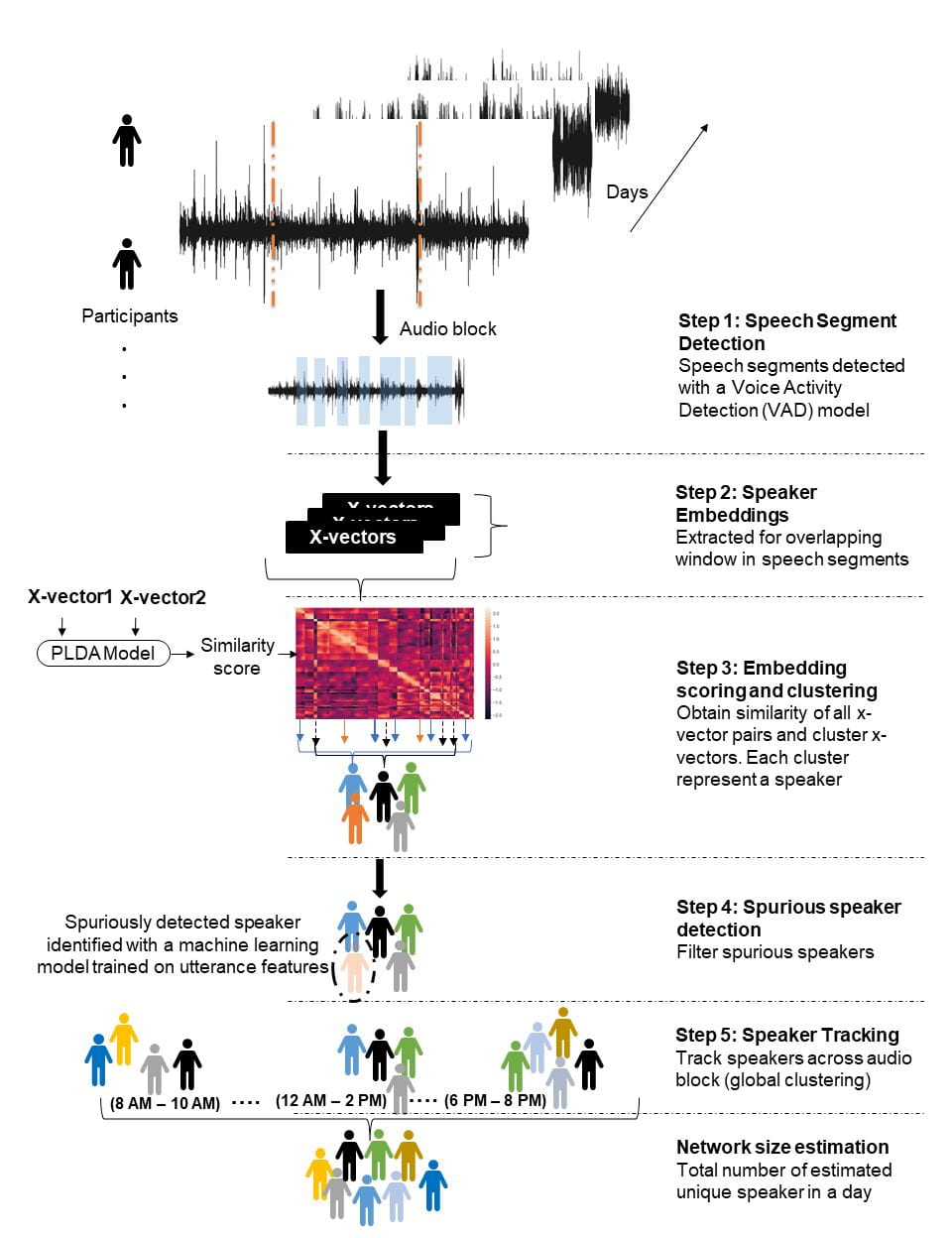

We developed a speech processing pipeline called ECoNet (Everyday Conversational Network Estimator). The pipeline uses a voice activity detection (VAD) model based on the PyanNet architecture, which learns optimal filters in its input SincNet layers, and it outperforms other VAD alternatives in processing free-living audio recordings. We used x-vectors for speech representation and unsupervised clustering, similar to state-of-the-art speaker diarization pipelines. Additionally, to address the noise and speech variability commonly encountered in free-living conditions, we developed a machine learning model for spurious speaker detection. We also developed an unsupervised speaker tracking model that can identify speakers across multiple blocks of audio in a recording period.
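The clustering step can be illustrated with a minimal sketch. This is not the ECoNet implementation: it assumes speech segments have already been turned into fixed-length embedding vectors (x-vectors in our pipeline, toy 2-D vectors here) and groups them with average-linkage agglomerative clustering on cosine distance. The function names and the `threshold` value are hypothetical choices made for illustration.

```python
import math


def cosine_distance(u, v):
    """Return 1 - cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def cluster_embeddings(embeddings, threshold=0.5):
    """Average-linkage agglomerative clustering with a distance cutoff.

    Returns one cluster label per embedding. `threshold` is an assumed,
    illustrative cutoff, not a value taken from ECoNet.
    """
    clusters = [[i] for i in range(len(embeddings))]

    def linkage(a, b):
        # Mean pairwise distance between the members of two clusters.
        dists = [cosine_distance(embeddings[i], embeddings[j]) for i in a for j in b]
        return sum(dists) / len(dists)

    while len(clusters) > 1:
        # Find the closest pair of clusters.
        best = None
        for x in range(len(clusters)):
            for y in range(x + 1, len(clusters)):
                d = linkage(clusters[x], clusters[y])
                if best is None or d < best[0]:
                    best = (d, x, y)
        if best[0] > threshold:
            break  # remaining clusters are treated as different speakers
        _, x, y = best
        clusters[x] = clusters[x] + clusters[y]
        del clusters[y]

    labels = [0] * len(embeddings)
    for label, members in enumerate(clusters):
        for i in members:
            labels[i] = label
    return labels


# Toy embeddings standing in for x-vectors of four speech segments.
toy_embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
toy_labels = cluster_embeddings(toy_embeddings)
num_speakers = len(set(toy_labels))
```

In this toy case the first two segments fall into one cluster and the last two into another, so two speakers are inferred. Real x-vectors are much higher-dimensional, and the stopping criterion would need to be tuned on free-living data.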

As a generic speech processing pipeline for inferring speaker dynamics in free-living audio, ECoNet can be adapted to different possible sociability markers of depression. So far, we have adapted ECoNet to infer social network size (the average number of people one interacts with in a day) and dyadic interaction patterns.
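As a rough illustration of the network size definition, the sketch below averages the number of distinct conversation partners per recorded day. It assumes that speaker tracking has already assigned a consistent ID to each detected speaker and that the participant's own voice has been excluded; the function name, the input layout, and the speaker IDs are hypothetical.

```python
def mean_daily_network_size(speakers_by_day):
    """Average number of distinct conversation partners per recorded day.

    `speakers_by_day` maps a day label to the list of tracked speaker IDs
    detected on that day (an assumed input format, for illustration only).
    """
    if not speakers_by_day:
        raise ValueError("need at least one recorded day")
    daily_sizes = [len(set(ids)) for ids in speakers_by_day.values()]
    return sum(daily_sizes) / len(daily_sizes)


# Synthetic example: three recorded days with 2, 1, and 3 distinct partners.
example_days = {
    "day1": ["spk_a", "spk_b", "spk_a"],
    "day2": ["spk_a"],
    "day3": ["spk_c", "spk_d", "spk_b"],
}
network_size = mean_daily_network_size(example_days)
```

A speaker who appears several times in one day is counted once for that day, which is why speaker tracking across audio blocks matters for this measure.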

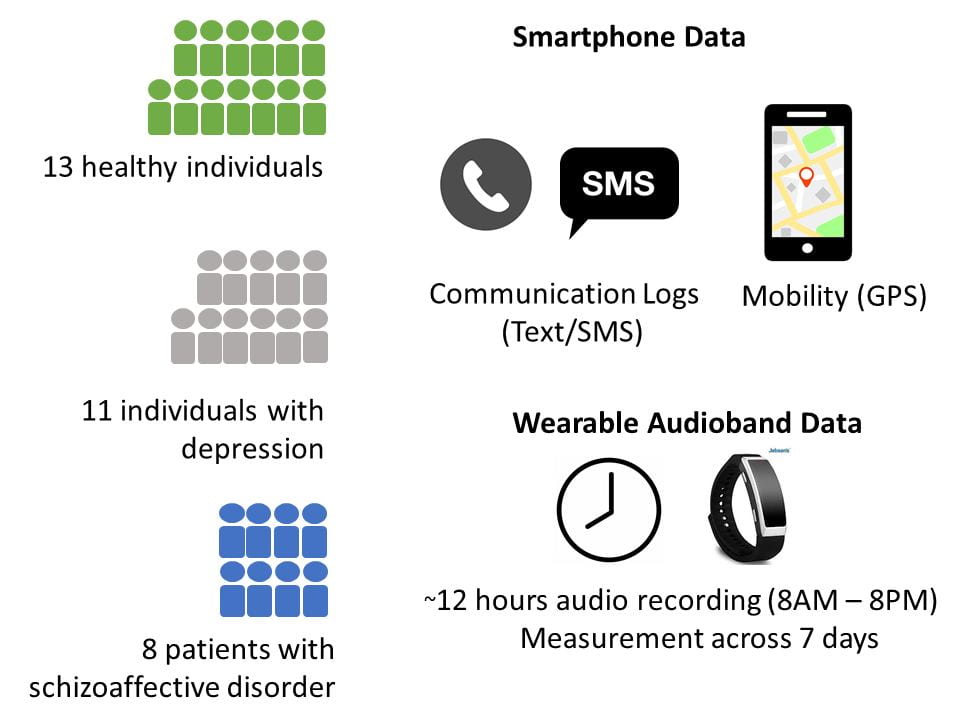

We conducted a clinical study with 32 participants, comprising healthy participants and participants with depression or psychosis, to build and validate our solutions.

Results:

- Social Network Size and Mental Health: Social network size, automatically inferred from multi-day, multi-hour audio recordings, was found to correlate negatively with depression severity. A significant difference in network size was observed between the depression and healthy groups. The psychosis group had a smaller network size than the healthy group and a larger one than the depression group (though not significantly so).

- Dyadic Interaction Measures and Mental Health: Dyadic clinical interviews have been reported to reveal markers of depression, such as speech pause time and response time. We pursued a first-of-its-kind speech timing analysis of dyadic interactions in free-living conditions, using an adaptation of ECoNet for dyadic interaction detection. Response time, but not pause time, was significantly correlated with depression severity. Additionally, dyadic interaction frequency showed potential as a detector of depression onset and as a marker of depression severity in general.
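The speech timing measures mentioned in the results can be sketched as follows. This is a simplified illustration, not the analysis code from the study: it assumes a dyadic interaction is available as diarized `(speaker, start, end)` segments in seconds, treats a silent gap between consecutive segments of the same speaker as a pause, and treats a silent gap at a speaker change as a response time. The function names, the segment format, and the handling of overlapping speech are assumptions.

```python
def speech_timing(segments):
    """Split silent gaps between consecutive segments into pauses and responses.

    `segments` is a list of (speaker, start_sec, end_sec) tuples.
    Returns (pauses, responses) as lists of gap durations in seconds.
    """
    ordered = sorted(segments, key=lambda s: s[1])
    pauses, responses = [], []
    for (spk_a, _, end_a), (spk_b, start_b, _) in zip(ordered, ordered[1:]):
        gap = start_b - end_a
        if gap < 0:
            continue  # overlapping speech is ignored in this sketch
        if spk_a == spk_b:
            pauses.append(gap)      # same speaker resumes after a silence
        else:
            responses.append(gap)   # the other speaker takes the turn
    return pauses, responses


def mean_or_none(values):
    """Mean of a list, or None when the list is empty."""
    return sum(values) / len(values) if values else None


# Synthetic dyadic interaction between a participant and one partner.
example_segments = [
    ("participant", 0.0, 2.0),
    ("participant", 2.5, 4.0),
    ("partner", 5.0, 7.0),
    ("participant", 7.5, 9.0),
]
pauses, responses = speech_timing(example_segments)
mean_pause = mean_or_none(pauses)
mean_response = mean_or_none(responses)
```

A per-participant analysis would likely restrict response times to the participant's own replies and aggregate over many interactions before relating them to depression severity.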

Team

Bishal Lamichhane

Graduate Student, ECE

Rice University

Dr. Ashutosh Sabharwal

Professor

ECE, Rice University

Dr. Ankit Patel

Assistant Professor

ECE, Rice University

Dr. Nidal Moukaddam

Assistant Professor

Baylor College of Medicine

Papers

GitHub Code

Get in touch with Bishal Lamichhane (bishal.lamichhane@rice.edu) for access to a research repository here: https://github.com/lbishal/EcoNet. We are working on a more user-friendly way to invoke ECoNet on your own audio data. Watch out for updates here!